On August 25, 2016, the International Vehicle Networking and Smart Transport Exhibition (MMC 2016) was held in Shanghai. More than 100 companies working on vehicle networking, new energy vehicles, smart motorcycles, and smart travel platforms gathered at the New International Expo Center to discuss “Smart Travel, Connected Life”.

On the afternoon of the first day, at the forum session entitled “Voice Sound and Sound Activity”, several well-known domestic providers of speech interaction solutions gave talks and took part in discussion. Lei Feng network has excerpted parts of the speakers' talks:

Yun Zhisheng's Chen Jisheng: Understanding people and executing is the key

Voice holds a system-level interaction role in the car, so it requires system-level thinking and design: starting from the concept, then UI/UX design, system integration, and finally marketing. The interaction design must follow these principles:

Simplicity: The experience must be consistent, match how people think, and be easy to understand.

Usefulness: Show only what the driver cares about. The system can help with a decision, but the final decision rests with the driver; it can also guide the driver in correcting errors.

Efficiency: Heavy users should be able to operate more efficiently.

Safety: The driver is interrupted only in an emergency, and voice frees the driver's hands and eyes.

Because the in-vehicle environment is complex and noisy, multi-microphone arrays are needed to ensure correct speech recognition. Voiceprints carry personalized biometric information (distinguishing men, women, and children), which can make the system more efficient for heavy users.

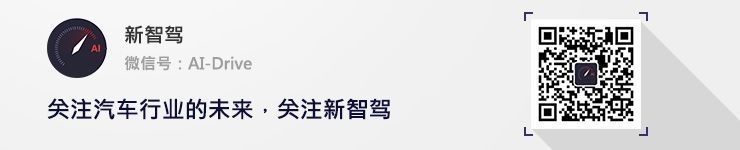

The “pragmatic calculations” that Yun Zhisheng promotes are really about understanding people’s intentions.

In-vehicle safety is the most important guarantee for travel, so voice operations must be made as fast as possible: avoid showy multi-round dialogue and repeated corrections, and promote wake-word-free operation in specific situations.

Pragmatic calculations return truly useful information rather than just semantics: utterances are not only recognized but also understood, and the system can then propose a course of action.

Kay LiDE Navigation's Liu Zhijian: Combination of Speech and High-precision Maps

The three elements of autonomous driving are perception, maps, and driving strategy. Maps and driving strategy both rely on perception and need to be updated; the two complement and reinforce each other.

Lane recognition and the provision of drivable paths do not rely on image recognition alone; maps also play an important role. At this stage, voice interaction is the most common way to use an intelligent vehicle's driving strategies. The voice interaction instruction set must cover the analysis and learning of map and navigation commands. In future semi-autonomous driving scenarios, fast and accurate voice recognition and lane-level navigation will be required to ensure driving safety.

The types of voice interaction involved in high-precision map applications include positioning, display, route planning and guidance, and assisted driving.

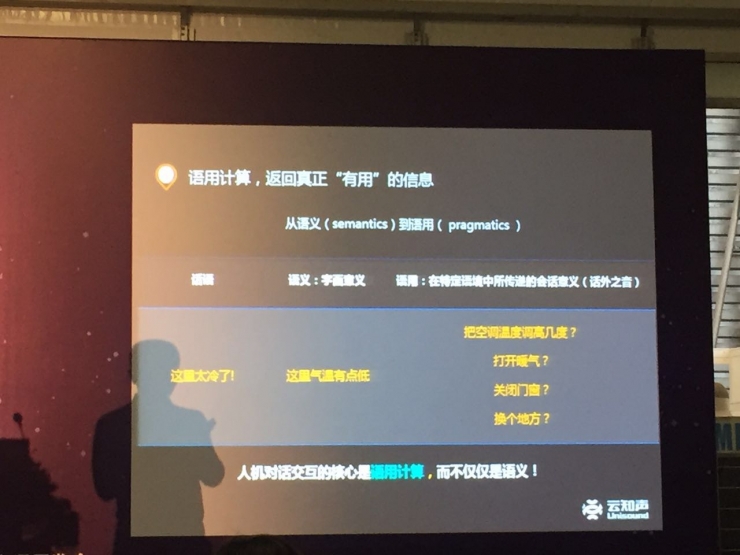

Understanding requires high precision and high speed

High-precision natural semantic understanding:

Understanding multiple semantics in one request, for example “to Guangzhou South Railway Station, I would like to go along the Yangtze River Expressway” or “go to the airport without taking the elevated road”; more accurate voice interaction feedback; and fast response, high-precision positioning beyond the line of sight, and more accurate prediction.
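As a rough illustration of what “multiple semantics” means here, a single spoken request can carry a destination, a preferred road, and something to avoid. The sketch below is a toy rule-based slot filler, not any vendor's method; the `NavIntent` schema, the `parse_nav_request` function, and the English trigger words “via” and “without” are all assumptions made for this example.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class NavIntent:
    """Hypothetical structured result for one spoken navigation request."""
    destination: Optional[str] = None
    via: List[str] = field(default_factory=list)    # roads the driver wants to take
    avoid: List[str] = field(default_factory=list)  # roads the driver wants to skip


def parse_nav_request(utterance: str) -> NavIntent:
    """Toy keyword-based parser; a production system would use a trained NLU model."""
    text = utterance.lower().strip()
    intent = NavIntent()

    # Destination: the phrase after "to", up to a comma or a constraint keyword.
    m = re.search(r"\bto (?:the )?(.+?)(?=,| via | without |$)", text)
    if m:
        intent.destination = m.group(1).strip()

    # Preferred road: the phrase after the assumed keyword "via".
    m = re.search(r"\bvia (?:the )?(.+?)(?=,| without |$)", text)
    if m:
        intent.via.append(m.group(1).strip())

    # Road to avoid: the phrase after the assumed keyword "without".
    m = re.search(r"\bwithout (?:taking |using )?(?:the )?(.+?)(?=,|$)", text)
    if m:
        intent.avoid.append(m.group(1).strip())

    return intent


request_a = parse_nav_request(
    "Go to Guangzhou South Railway Station via the Yangtze River Expressway"
)
request_b = parse_nav_request("Go to the airport without taking the elevated road")
print(request_a)
print(request_b)
```

The point of the structure is that route planning receives all constraints at once, instead of the driver issuing one command per constraint.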

For future intelligent connected cars, high-precision maps and intelligent voice interaction are two core factors.

Onstar's Zhu Jiaming: Emotional Voice Interaction

Voice interactions in the car include voice reminders, voice input, voice control, sound effects, music, voice calls, operational feedback, and radio stations.

The user experience of in-car voice interaction progresses through three levels: safety (freeing the hands, avoiding distraction), then convenience (improving efficiency, reducing learning effort, timely feedback), then enjoyment (emotional interaction, emotional satisfaction).

The core of sound interaction in connected-car products: task nodes and sound interact with each other.

The choice of targeted interaction

Butler-style, emotional, scenario-based service experience:

The system can provide personalized services and push information based on dynamic scenarios and user data; it interacts with drivers in emotional language; and ever-present, caring voice reminders make the experience feel personal.

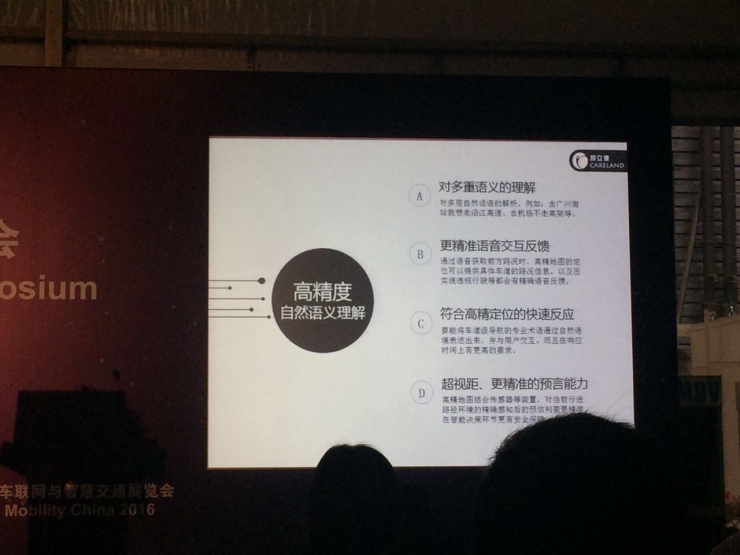

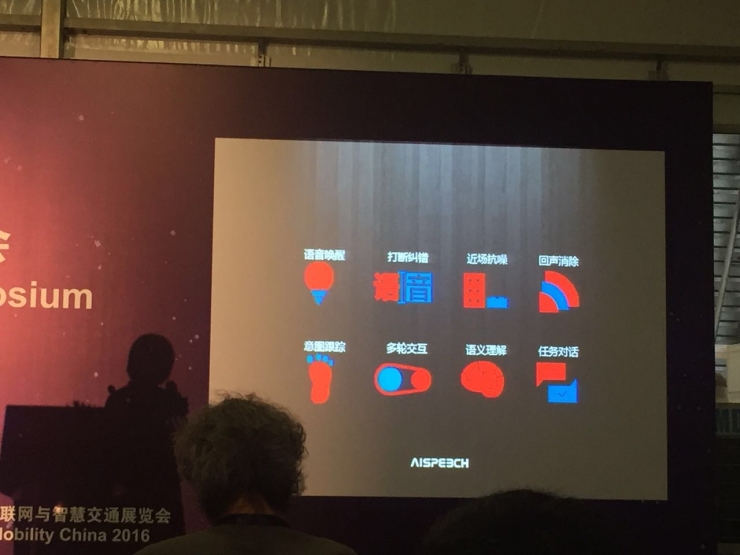

Sibi Chi's Long Mengzhu: The ultimate experience and algorithm is king

She believes the current user pain point is this: users are eager for spoken interaction, but the driving environment has a lot of noise interference, and little content fits the scenario.

To provide the best possible experience, map navigation, social networking, music libraries, radio, information search, and O2O services can be combined. Further detail optimizations include:

Voice-controlled wake-up for the radio, navigation that can connect to all map resources, switchable synthesized voices (custom voices), road-condition queries, an upgraded interaction experience with cross-domain interruption (switching directly between functions such as navigation, music, WeChat, and telephone), and custom wake-up words (no longer limited to a single system-defined phrase such as “Hey, Siri”).

Solving users' pain points with technology

Speech interaction is currently in transition from single-modal to multimodal (combining multiple interaction modes), and from passive to active interaction (the machine proactively interacting with the person).

She thinks that the recognition accuracy figures emphasized in the past are meaningless, because users can hardly tell the difference. She said that big data is very important at present, but the quality of the algorithm matters even more.

Data reveals the prospects of the entire market

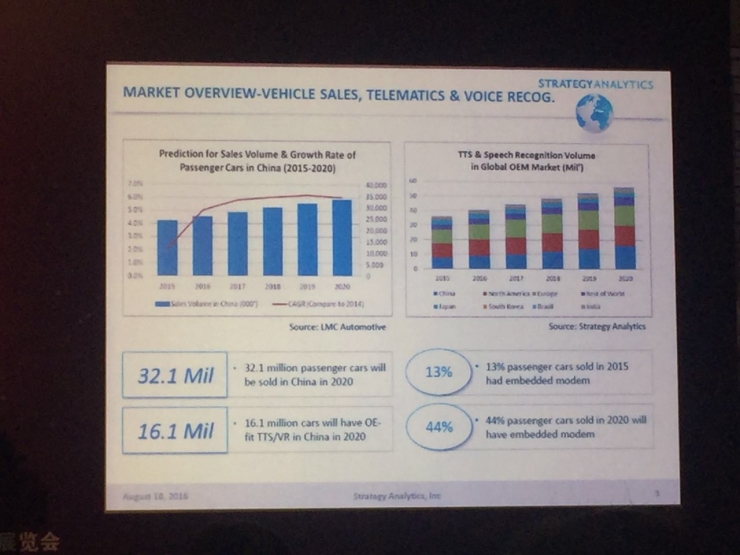

At the meeting, Li Jianyu, Senior Analyst for the global automotive industry at Strategy Analytics, pointed out that in-car voice technology does not yet have a high market share in actual use.

In 2015, passenger vehicles with embedded telematics modules accounted for about 13% of the world total, and the share is expected to reach 44% in 2020. In China, about 32 million passenger cars are expected to be sold in 2020, of which vehicles with remote control and voice recognition modules will account for about 50%. The future market is very large.
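To make the scale concrete, the short calculation below works through the figures quoted above. It is only arithmetic on the stated approximations (about 32 million cars, about 50%, and 13% rising to 44%); the variable names are chosen for this example.

```python
# Approximate figures quoted in the text.
china_passenger_cars_2020 = 32_000_000  # about 32 million passenger cars sold
equipped_share_china = 0.50             # about 50% with remote control and voice modules

global_share_2015 = 13                  # percent of passenger vehicles with embedded telematics
global_share_2020 = 44                  # percent expected in 2020

# Vehicles in China carrying the modules in 2020.
equipped_cars = round(china_passenger_cars_2020 * equipped_share_china)

# Change in the global share, in percentage points.
share_growth_points = global_share_2020 - global_share_2015

print(f"{equipped_cars:,} equipped vehicles in China")         # 16,000,000
print(f"{share_growth_points} percentage points of growth")    # 31
```

On these figures, roughly 16 million vehicles sold in China in 2020 would carry the modules, and the global share would grow by 31 percentage points in five years.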

Voice control in in-vehicle systems has become an important part of vehicle networking. Voice interaction is gradually replacing traditional manual control and becoming one of the distinctive features of the intelligent vehicle. Vendors need to keep improving the user experience on the basis of safety and make “sound and sound” a reality.

This article was produced by New Intellectual Driving.