To keep things simple, the image is represented here by one-dimensional coordinates. Two- and three-dimensional versions are harder to draw, and since I don't use MATLAB, a 1D illustration is the simplest way to convey the idea.

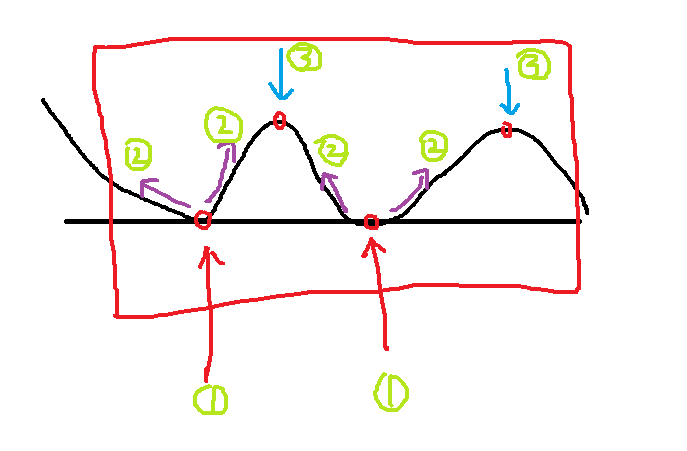

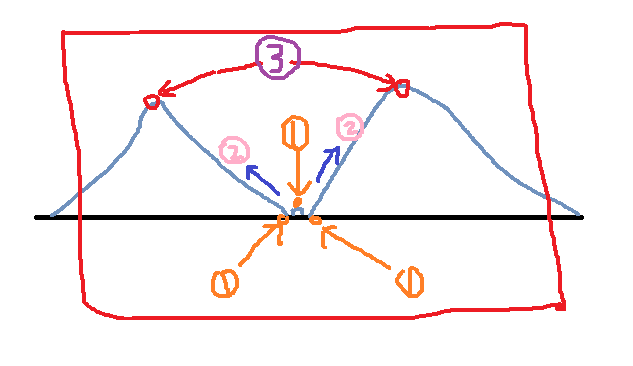

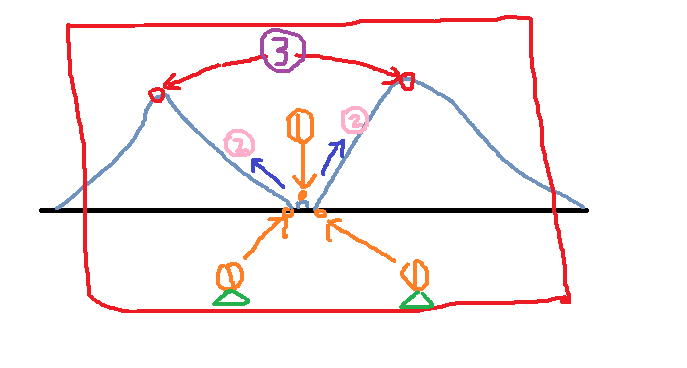

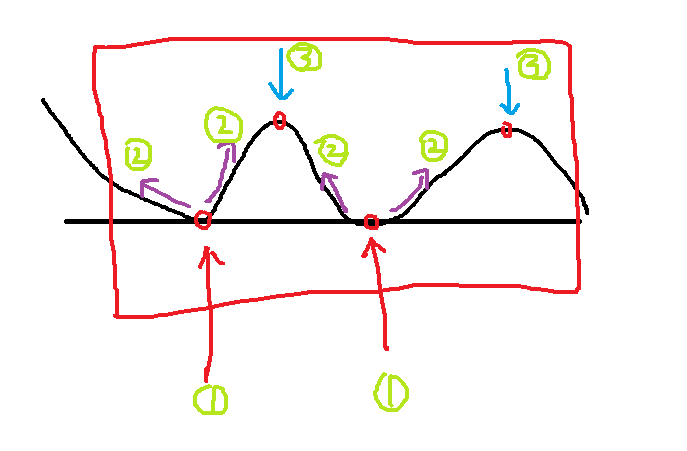

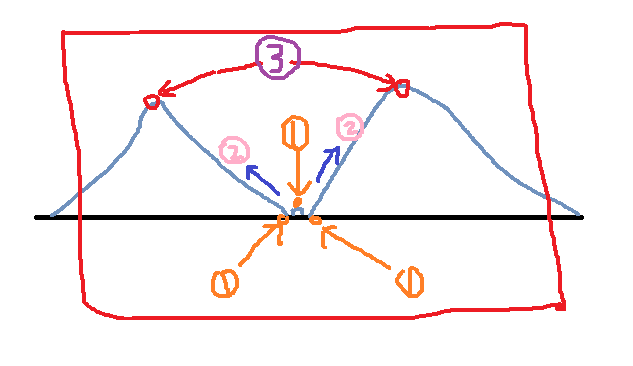

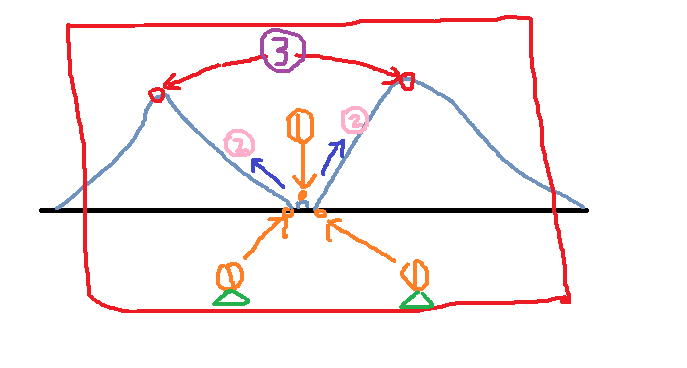

Step 1: Find the local minima of the image. There are many ways to do this, for example by sliding a kernel over the image or by comparing each pixel directly with its neighbours. Neither is difficult to implement.
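
For the 1D case in the figures, the "compare each pixel with its neighbours" approach can be sketched as below. This is an illustration, not the author's code: `localMinima` is a hypothetical helper, it reports only strict minima (lower than every existing neighbour, with each end having a single neighbour), and it deliberately ignores plateaus of equal values.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Return the indices of strict local minima of a 1D signal: samples lower
// than every existing neighbour (the two ends only have one neighbour).
// Plateaus (runs of equal values) are not reported in this simple sketch.
std::vector<std::size_t> localMinima(const std::vector<int>& s) {
    std::vector<std::size_t> minima;
    for (std::size_t i = 0; i < s.size(); ++i) {
        bool lowerThanLeft = (i == 0) || s[i] < s[i - 1];
        bool lowerThanRight = (i + 1 == s.size()) || s[i] < s[i + 1];
        if (lowerThanLeft && lowerThanRight) minima.push_back(i);
    }
    return minima;
}
```

For example, `localMinima({5, 2, 4, 6, 3, 1, 7})` returns the indices 1 and 5. In 2D the same test is applied over a 3x3 (or larger) neighbourhood.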

Step 2: Start flooding from the lowest point. As the water level rises, it fills the "basins" (the image is first converted into a gradient image). The marked minima act as the seeds of their own basins and are not submerged, while the points between them are filled as the water rises.
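
A minimal 1D sketch of this marker-based flooding is shown below, using a priority queue so that the lowest sample is always flooded next. It is similar in spirit to the marker-based variant that `cv::watershed` implements, but it is only an illustration under stated assumptions: `flood1D`, its arguments, and the label convention (basin `k` gets label `k + 1`, `-1` marks the dam between two basins) are hypothetical choices for this example. A sample reached from only one basin joins it; a sample reached from two different basins becomes a dam.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <initializer_list>
#include <queue>
#include <utility>
#include <vector>

// Marker-based flooding of a 1D relief (for example a gradient profile).
// seeds[k] is the position of basin k, which receives label k + 1.
// Returns one label per sample; -1 marks a dam where two basins meet.
std::vector<int> flood1D(const std::vector<int>& height,
                         const std::vector<std::size_t>& seeds) {
    const int n = static_cast<int>(height.size());
    std::vector<int> label(n, 0);        // 0 = not reached by water yet
    std::vector<bool> queued(n, false);
    using Item = std::pair<int, int>;    // (height, position)
    // Min-heap: the lowest queued sample is always flooded next.
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pq;

    // Queue the unlabelled, not-yet-queued neighbours of position p.
    auto pushNeighbours = [&](int p) {
        for (int q : {p - 1, p + 1}) {
            if (q >= 0 && q < n && label[q] == 0 && !queued[q]) {
                queued[q] = true;
                pq.push({height[q], q});
            }
        }
    };

    for (std::size_t k = 0; k < seeds.size(); ++k) {
        assert(seeds[k] < height.size());
        label[seeds[k]] = static_cast<int>(k) + 1;
    }
    for (std::size_t seed : seeds) pushNeighbours(static_cast<int>(seed));

    while (!pq.empty()) {
        const int p = pq.top().second;
        pq.pop();
        // Look at the basins that have already reached p's neighbours.
        int basin = 0;
        bool conflict = false;
        for (int q : {p - 1, p + 1}) {
            if (q < 0 || q >= n || label[q] <= 0) continue;
            if (basin == 0) basin = label[q];
            else if (label[q] != basin) conflict = true;
        }
        label[p] = conflict ? -1 : basin;
        if (!conflict) pushNeighbours(p);
    }
    return label;
}
```

With heights `{3, 1, 2, 5, 2, 0, 4}` and seeds at positions 1 and 5, the result is `{1, 1, 1, -1, 2, 2, 2}`: the peak at position 3 is where the two basins meet, so it becomes the dam.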

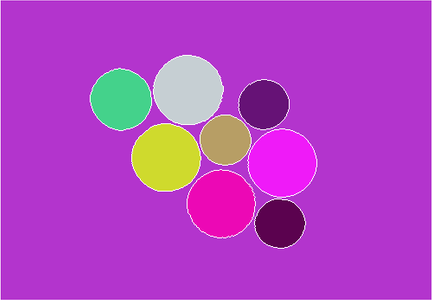

Step 3: Identify the local maximum points, which correspond to the three positions shown in the figure.
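
In the 1D picture, these local maxima are the ridges separating neighbouring basins. Assuming the minima from Step 1 are already known and sorted, one simple way to locate the ridges is to take the highest sample between each pair of consecutive minima. `ridgesBetween` is a hypothetical helper for this illustration; it does not treat plateaus specially and keeps the first of several equally high samples.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// For each pair of consecutive minima, return the position of the highest
// sample strictly between them: the ridge separating the two basins.
// minima must be sorted in increasing order.
std::vector<std::size_t> ridgesBetween(const std::vector<int>& s,
                                       const std::vector<std::size_t>& minima) {
    std::vector<std::size_t> ridges;
    for (std::size_t k = 0; k + 1 < minima.size(); ++k) {
        assert(minima[k] < minima[k + 1] && minima[k + 1] < s.size());
        std::size_t best = minima[k];
        for (std::size_t i = minima[k] + 1; i < minima[k + 1]; ++i) {
            if (s[i] > s[best]) best = i;  // keep the first of equal peaks
        }
        ridges.push_back(best);
    }
    return ridges;
}
```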

Step 4: Use the local minima and maxima found to segment the image.
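
Once the ridge positions are known, segmenting the 1D signal reduces to cutting it at those positions. The sketch below uses a hypothetical `segmentByRidges` and assumes the ridge indices are sorted and distinct. It labels the samples before the first ridge 1, the next run 2, and so on, and marks the ridges themselves with -1, mirroring how `cv::watershed` marks boundary pixels.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Split a signal of length n at the given ridge positions.
// Returns one label per sample: 1, 2, ... for the segments, -1 on ridges.
std::vector<int> segmentByRidges(std::size_t n, const std::vector<std::size_t>& ridges) {
    for (std::size_t k = 1; k < ridges.size(); ++k) assert(ridges[k - 1] < ridges[k]);
    std::vector<int> label(n, 0);
    int current = 1;       // label of the segment being filled
    std::size_t r = 0;     // next ridge to reach
    for (std::size_t i = 0; i < n; ++i) {
        if (r < ridges.size() && ridges[r] == i) {
            label[i] = -1;  // the ridge itself is a boundary
            ++current;      // samples after it start a new segment
            ++r;
        } else {
            label[i] = current;
        }
    }
    return label;
}
```

For a signal of length 7 with a single ridge at position 3, this gives `{1, 1, 1, -1, 2, 2, 2}`.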

#include

using namespace cv;

using namespace std;

void waterSegment(InputArray& _src, OutputArray& _dst, int& noOfSegment);

int main(int argc, char** argv) {

Mat inputImage = imread("coins.jpg");

assert(!inputImage.data);

Mat grayImage, outputImage;

int offSegment;

waterSegment(inputImage, outputImage, offSegment);

waitKey(0);

return 0;

}

void waterSegment(InputArray& _src, OutputArray& _dst, int& noOfSegment) {

Mat src = _src.getMat();

Mat grayImage;

cvtColor(src, grayImage, CV_BGR2GRAY);

threshold(grayImage, grayImage, 0, 255, THRESH_BINARY | THRESH_OTSU);

Mat kernel = getStructuringElement(MORPH_RECT, Size(9, 9), Point(-1, -1));

morphologyEx(grayImage, grayImage, MORPH_CLOSE, kernel);

distanceTransform(grayImage, grayImage, DIST_L2, DIST_MASK_3, 5);

normalize(grayImage, grayImage, 0, 1, NORM_MINMAX);

grayImage.convertTo(grayImage, CV_8UC1);

threshold(grayImage, grayImage, 0, 255, THRESH_BINARY | THRESH_OTSU);

morphologyEx(grayImage, grayImage, MORPH_CLOSE, kernel);

vector> contours;

vector hierarchy;

Mat showImage = Mat::zeros(grayImage.size(), CV_32SC1);

findContours(grayImage, contours, hierarchy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point(-1, -1));

for (size_t i = 0; i < contours.size(); i++) {

drawContours(showImage, contours, static_cast(i), Scalar::all(static_cast(i + 1)), 2);

}

Mat k = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));

morphologyEx(src, src, MORPH_ERODE, k);

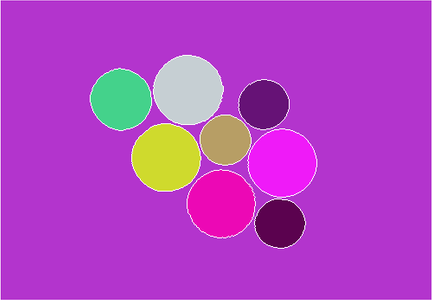

watershed(src, showImage);

vector Colors;

for (size_t i = 0; i < contours.size(); i++) {

int r = theRNG().uniform(0, 255);

int g = theRNG().uniform(0, 255);

int b = theRNG().uniform(0, 255);

Colors.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));

}

Mat dst = Mat::zeros(showImage.size(), CV_8UC3);

int index = 0;

for (int row = 0; row < showImage.rows; row++) {

for (int col = 0; col < showImage.cols; col++) {

index = showImage.at(row, col);

if (index > 0 && index <= contours.size()) {

dst.at(row, col) = Colors[index - 1];

} else if (index == -1) {

dst.at(row, col) = Vec3b(255, 255, 255);

} else {

dst.at(row, col) = Vec3b(0, 0, 0);

}

}

}

_dst.assign(dst);

}

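Watershed on its own often over-segments an image. The second listing merges regions of similar colour: it gathers the pixels of each label, builds a hue-saturation histogram per region, and relabels a region into an earlier one when their histograms correlate strongly. The beginning of the function was missing, so the signature below is a reconstruction: `segMerge` is a hypothetical name, `image` is assumed to be the BGR input and `segments` the `CV_32SC1` label map produced by `watershed`.

```cpp
#include <opencv2/opencv.hpp>
#include <vector>

using namespace cv;
using namespace std;

// Reconstructed header (hypothetical name). image: BGR source image;
// segments: CV_32SC1 label map from watershed (labels 1..numSeg, -1 on
// boundaries); numSeg: number of labels.
void segMerge(const Mat& image, Mat& segments, int& numSeg) {
    // Samples[l] collects the pixels of label l as an N x 1 CV_8UC3 column.
    vector<Mat> Samples(numSeg + 1);
    for (int i = 0; i < segments.rows; i++) {
        for (int j = 0; j < segments.cols; j++) {
            int index = segments.at<int>(i, j);
            if (index >= 1 && index <= numSeg) {
                Samples[index].push_back(image.at<Vec3b>(i, j));
            }
        }
    }
    // Hue-saturation histogram per label. OpenCV's 8-bit HSV stores
    // H in [0, 180) and S in [0, 256).
    int h_bins = 35, s_bins = 30;
    int histSize[2] = { h_bins, s_bins };
    float h_range[2] = { 0, 180 };
    float s_range[2] = { 0, 256 };
    const float* range[2] = { h_range, s_range };
    int channels[2] = { 0, 1 };
    vector<Mat> Hist_bases(numSeg + 1);  // Hist_bases[l] belongs to label l
    for (int l = 1; l <= numSeg; l++) {
        if (Samples[l].empty()) continue;
        Mat hsv_base, hist_base;  // fresh Mats so each entry owns its own data
        cvtColor(Samples[l], hsv_base, COLOR_BGR2HSV);
        calcHist(&hsv_base, 1, channels, Mat(), hist_base, 2, histSize, range);
        normalize(hist_base, hist_base, 0, 1, NORM_MINMAX);
        Hist_bases[l] = hist_base;
    }
    // Merge label j into label i when their histograms are similar.
    // Correlation is 1 for identical histograms, so "> 0.8" means "very similar".
    // (HISTCMP_BHATTACHARYYA is a distance, 0 for identical histograms,
    // so it would need the opposite test.)
    int newNumSeg = numSeg;
    vector<bool> Merged(numSeg + 1, false);
    for (int i = 1; i <= numSeg; i++) {
        if (Merged[i] || Hist_bases[i].empty()) continue;
        for (int j = i + 1; j <= numSeg; j++) {
            if (Merged[j] || Hist_bases[j].empty()) continue;
            double similarity = compareHist(Hist_bases[i], Hist_bases[j], HISTCMP_CORREL);
            if (similarity > 0.8) {
                Merged[j] = true;
                newNumSeg--;
                // Relabel every pixel of region j as region i.
                for (int p = 0; p < segments.rows; p++) {
                    for (int k = 0; k < segments.cols; k++) {
                        if (segments.at<int>(p, k) == j) segments.at<int>(p, k) = i;
                    }
                }
            }
        }
    }
    // numSeg now counts the remaining regions; label values are not
    // renumbered, so they may have gaps.
    numSeg = newNumSeg;
}
```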
