1 Development and characteristics of MPEG

1.1 MPEG-1

Before MPEG, two image compression standards already existed: JPEG for still images, and H.261 for video in videophone and videoconferencing applications. Neither was tied to computer data standards. A unified standard was therefore needed that covered images, sound, storage and transmission for both computer systems and broadcasting, so that media could be exchanged widely. MPEG was created to meet this need.

The basic task of the MPEG-1 standard is to turn image data (including sound) of suitable quality into a kind of computer data that is compatible with the data already held in computers (such as text and drawings) and that can be transmitted over existing computer networks and over radio and television communication networks. The standard has three components: MPEG video, MPEG audio, and MPEG systems. The problems it addresses are therefore video compression, audio compression, and the multiplexing and synchronization of multiple compressed data streams.

MPEG-1 is a coding standard for moving pictures and their associated audio on digital storage media at a data rate of about 1.5 Mbps. It can handle many types of moving images, and its basic algorithm works very well on moving images with a spatial resolution of 360 pixels horizontally by 288 pixels vertically at 24 to 30 frames per second. Unlike JPEG, it does not define the detailed algorithm needed to generate a legal data stream, which leaves a great deal of flexibility in encoder design. In addition, the parameters that define the encoded bit stream and the decoder are carried in the bit stream itself. These features allow the algorithm to be used with images of different sizes and aspect ratios, and with channels and devices that operate over a wide range of rates.
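To give a feel for how much compression these figures imply, the short calculation below estimates the uncompressed bit rate of a 360 x 288 sequence and compares it with the 1.5 Mbps target. It is only a rough sketch: the 25 frames per second, 8 bits per sample and 4:2:0 chroma sampling (1.5 samples per pixel) are assumptions chosen for illustration, and the 1.5 Mbps figure also has to carry audio and system data.

```python
def raw_bitrate_bps(width, height, fps, bits_per_sample=8, samples_per_pixel=1.5):
    """Uncompressed video bit rate in bits per second.

    samples_per_pixel=1.5 models 4:2:0 chroma sub-sampling (an assumption):
    one luminance sample per pixel plus two chrominance samples per 2x2 pixels.
    """
    return width * height * samples_per_pixel * bits_per_sample * fps


raw = raw_bitrate_bps(360, 288, fps=25)   # assumed frame rate of 25 fps
target = 1.5e6                            # data rate quoted for MPEG-1
ratio = raw / target

print(f"raw: {raw / 1e6:.1f} Mbps, required compression: about {ratio:.1f}:1")
```

Under these assumptions the raw stream is about 31.1 Mbps, so the video must be compressed by a factor of roughly 20 before audio is even accounted for.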

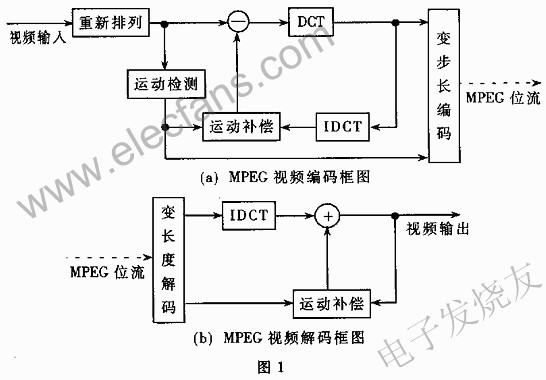

MPEG-1 video compression first sub-samples the color-difference signals to reduce the amount of data, then uses motion compensation to reduce inter-frame redundancy. A two-dimensional DCT removes spatial correlation, and the DCT coefficients are quantized so that unimportant information is discarded. The quantized coefficients are reordered by frequency and coded with variable-length codes, and the DC component of each block is coded differentially by prediction. The block diagram of MPEG video encoding and decoding is shown in Figure 1.
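The spatial part of this pipeline can be sketched in a few lines of Python. The code below is a simplified illustration, not the normative MPEG-1 procedure: it uses a naive 8 x 8 DCT, a single uniform quantizer step (real encoders use quantization matrices), a zigzag scan for the frequency reordering, and a DC predictor that starts at 0. All function names are hypothetical, and sub-sampling, motion compensation and variable-length coding are left out.

```python
import math

N = 8  # block size used by the DCT


def dct_2d(block):
    """Naive orthonormal 8x8 type-II DCT of a block given as a list of rows."""
    def scale(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = scale(u) * scale(v) * total
    return out


def quantize(coeffs, step=16):
    """Uniform quantization with one step size (a simplifying assumption)."""
    return [[int(round(c / step)) for c in row] for row in coeffs]


def zigzag(block):
    """Reorder an 8x8 block from low to high frequency along anti-diagonals."""
    order = sorted(
        ((u, v) for u in range(N) for v in range(N)),
        key=lambda p: (p[0] + p[1], p[0] if (p[0] + p[1]) % 2 else p[1]),
    )
    return [block[u][v] for u, v in order]


def dc_differences(dc_values):
    """Code each block's DC value as the difference from the previous block."""
    prev = 0  # assumed initial predictor
    diffs = []
    for dc in dc_values:
        diffs.append(dc - prev)
        prev = dc
    return diffs


flat_block = [[128] * N for _ in range(N)]
scanned = zigzag(quantize(dct_2d(flat_block)))
print(scanned[:4])                       # DC coefficient first, then zeros
print(dc_differences([64, 66, 65]))      # small differences between neighbours
```

For a uniform block, all the energy ends up in the DC coefficient and every AC coefficient quantizes to zero, which is exactly the situation that the frequency reordering and variable-length coding exploit.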

1.2 MPEG-2

The MPEG-2 standard is titled "Generic coding of moving pictures and associated audio information". It is aimed mainly at the video and audio signals required by high-definition television (HDTV), at a transmission rate of 10 Mbps.

The MPEG-2 standard is published as the international standard ISO/IEC 13818, and its first nine parts are as follows. Part 1, Systems, describes how multiple video, audio and data elementary streams are combined into transport streams and program streams. Part 2, Video, describes the video coding method. Part 3, Audio, describes an audio coding method that is backward compatible with MPEG-1 audio. Part 4, Conformance testing, describes how to test whether an encoded stream complies with Parts 1, 2 and 3. Part 5, Software simulation, describes a software implementation of Parts 1, 2 and 3. Part 6, Digital Storage Media Command and Control (DSM-CC), describes the session signaling between server and user in an interactive multimedia network. Part 7, non-backward-compatible audio, specifies multi-channel audio coding that is not backward compatible with MPEG-1 audio. Part 8, 10-bit video, has been discontinued. Part 9, Real-time interface, specifies a real-time interface for delivering transport streams.

The MPEG-2 video coding standard is organized as a family of conformance points. According to the resolution of the coded picture, it defines four levels: low level (LL), in which the input signal has a quarter of the pixels of the ITU-R 601 format; main level (ML), in which the input signal is in ITU-R 601 format; high-1440 level (H14L), a high-definition format for 4:3 television; and high level (HL), a high-definition format for 16:9 television. According to the set of coding tools used, it defines five profiles: simple profile (SP), which uses only intra-coded reference frames (I) and predicted frames (P); main profile (MP), which adds bidirectionally predicted frames (B) to SP; SNR scalable profile (SNRP); spatially scalable profile (SSP); and high profile (HP). Certain combinations of level and profile form the subsets of the MPEG-2 video coding standard used in specific applications: for an input picture format, a specific set of compression tools generates an encoded stream within a specified range of bit rates.

The MPEG-2 video stream is divided into six layers. From top to bottom these are the video sequence layer (Sequence), the group of pictures layer (GOP), the picture layer (Picture), the slice layer (Slice), the macroblock layer (Macroblock) and the block layer (Block).
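The six-layer nesting can be pictured as a set of containers, each holding a list of the layer below it. The sketch below is purely illustrative: the class and field names are hypothetical, the real bitstream syntax carries many more header fields at every layer, and the six blocks per macroblock assume 4:2:0 sampling.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    # 64 quantized DCT coefficients of one 8x8 block
    coefficients: List[int] = field(default_factory=lambda: [0] * 64)


@dataclass
class Macroblock:
    blocks: List[Block] = field(default_factory=list)


@dataclass
class Slice:
    macroblocks: List[Macroblock] = field(default_factory=list)


@dataclass
class Picture:
    picture_type: str  # "I", "P" or "B"
    slices: List[Slice] = field(default_factory=list)


@dataclass
class GroupOfPictures:
    pictures: List[Picture] = field(default_factory=list)


@dataclass
class VideoSequence:
    profile: str  # e.g. "MP" for main profile
    level: str    # e.g. "ML" for main level
    gops: List[GroupOfPictures] = field(default_factory=list)


def count_blocks(sequence):
    """Walk all six layers and count the blocks at the bottom."""
    return sum(
        len(mb.blocks)
        for gop in sequence.gops
        for pic in gop.pictures
        for sl in pic.slices
        for mb in sl.macroblocks
    )


def make_macroblock():
    # Assumes 4:2:0 sampling: four luminance blocks plus two chrominance blocks
    return Macroblock(blocks=[Block() for _ in range(6)])


picture_i = Picture("I", [Slice([make_macroblock(), make_macroblock()])])
picture_p = Picture("P", [Slice([make_macroblock(), make_macroblock()])])
sequence = VideoSequence("MP", "ML", [GroupOfPictures([picture_i, picture_p])])
print(count_blocks(sequence))  # 2 pictures x 1 slice x 2 macroblocks x 6 blocks = 24
```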

The MPEG-2 encoding process is as follows. For intra-frame coding, the DCT is applied directly to the original image data, and the quantizer and bitstream encoder then generate the encoded bit stream without passing through the prediction loop. For inter-frame coding, the original image is first compared with the reference image held in the frame memory to calculate a motion vector, and a predicted image is generated from the motion vector and the reference frame. The difference between the original image and the predicted image is then transformed by the DCT and passed through the quantizer and bitstream encoder to produce the output bit stream.
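The inter-frame path can be illustrated with a minimal block-matching sketch. It is not the method prescribed by MPEG-2, which leaves motion estimation to the encoder designer: here an exhaustive search over a small window minimizes the sum of absolute differences (SAD), and the residual is what would then go to the DCT. The function names, the 4 x 4 block size and the search range of 2 pixels are all assumptions made for the example.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in the current frame
    and the block displaced by (dx, dy) in the reference frame."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + y + dy][bx + x + dx])
    return total


def best_motion_vector(cur, ref, bx, by, size=4, search=2):
    """Exhaustive search for the displacement with the lowest SAD."""
    height, width = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(cur, ref, bx, by, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            inside = (0 <= by + dy and by + dy + size <= height
                      and 0 <= bx + dx and bx + dx + size <= width)
            if not inside:
                continue  # skip candidates that fall outside the reference frame
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost


def residual(cur, ref, bx, by, mv, size=4):
    """Difference between the current block and its motion-compensated prediction."""
    dx, dy = mv
    return [[cur[by + y][bx + x] - ref[by + y + dy][bx + x + dx]
             for x in range(size)] for y in range(size)]


# Reference frame with distinct values; the current frame is the same
# content shifted one pixel to the right.
ref = [[x * x + 10 * y * y for x in range(8)] for y in range(8)]
cur = [[ref[y][max(x - 1, 0)] for x in range(8)] for y in range(8)]

mv, cost = best_motion_vector(cur, ref, bx=2, by=2)
print(mv, cost)                          # the best match lies one pixel to the left
print(residual(cur, ref, 2, 2, mv))      # an all-zero residual for a perfect match
```

When the prediction is good the residual is close to zero, so after the DCT and quantization very few coefficients remain to be coded.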

1.3 MPEG-4

The goal of the MPEG-4 standard is to support a variety of multimedia applications (with the main focus on access to multimedia content), with a decoder that can be configured on site according to the requirements of each application. MPEG-4 aims to provide a flexible framework and an open set of coding tools for the communication, access and management of audio-visual data.

In the MPEG-4 image and video standard, the goal of the video representation tools is to provide standardized core technologies for the efficient storage, transmission and management of texture, image and video data in multimedia environments. Particular emphasis is placed on the ability of these tools to encode and decode the atomic units of image and video content, called video objects (VOs), and to represent video objects of arbitrary shape efficiently, in support of the so-called content-based feature set. This feature set supports the separate encoding and decoding of content (that is, of the physical objects, or VOs, in a scene). It provides a powerful underlying mechanism for interactivity, and it creates favorable conditions for the flexible representation and management of VO content in the compressed domain.

The MPEG-4 image and video standard supports, in a uniform way, the encoding and decoding of both conventional rectangular images and video and those of arbitrary shape. For content-based applications, the input image sequence may have any shape and position. The shape can be represented by an 8-bit transparency component (when a VO is composed with other objects) or by a binary mask. In addition, applying suitably refined object-based motion prediction tools to each object in the scene can greatly improve the compression ratio of certain video sequences. The content-based coding extension in MPEG-4 can be regarded as a logical extension of the conventional VLBV (very low bit-rate video) core and HBV (high bit-rate video) tools from rectangular input to input of arbitrary shape. In this sense, content-based coding is a superset of the VLBV and HBV cores.
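The two shape representations mentioned above can be illustrated with a small compositing sketch. It is an assumption-laden toy rather than the MPEG-4 composition process: images are plain lists of gray values, the function names are hypothetical, and a binary mask is simply treated as the special case of an 8-bit transparency plane whose values are either 0 or 255.

```python
def binary_mask_to_alpha(mask):
    """Treat a binary shape mask as an 8-bit transparency plane (0 or 255)."""
    return [[255 if m else 0 for m in row] for row in mask]


def composite(background, obj, alpha):
    """Blend a video object onto a background.

    alpha holds 8-bit transparency values: 0 keeps the background,
    255 shows the object, values in between mix the two.
    """
    return [
        [(a * o + (255 - a) * b + 127) // 255   # integer blend with rounding
         for b, o, a in zip(b_row, o_row, a_row)]
        for b_row, o_row, a_row in zip(background, obj, alpha)
    ]


background = [[10, 10], [10, 10]]
video_object = [[200, 200], [200, 200]]
mask = [[1, 0], [0, 1]]   # arbitrary (non-rectangular) shape

print(composite(background, video_object, binary_mask_to_alpha(mask)))
# [[200, 10], [10, 200]]
```

Because the object and its shape are kept separate from the background, the same object can be decoded, moved or replaced without touching the rest of the scene.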

The MPEG-4 standard adds seven new functions to the existing basis. The features of each are as follows.

(1) Content-based manipulation and bitstream editing. Content-based manipulation and bitstream editing can be carried out without re-encoding.

(2) Hybrid coding of natural and synthetic data. MPEG-4 provides a way to combine natural video images effectively with synthetic data (text, graphics) while supporting interactive operation.

(3) Improved random access in the time domain. MPEG-4 will provide an efficient random access method: within a limited time interval, an audio-visual sequence can be accessed at random by frame or by object of arbitrary shape.

(4) Improved coding efficiency. At bit rates comparable to those of existing and emerging standards, MPEG-4 will provide images of better subjective visual quality.

(5) Coding of multiple concurrent data streams. MPEG-4 will provide efficient multi-view coding of a scene, together with multi-channel audio coding and effective audio-visual synchronization. In stereoscopic video applications, MPEG-4 will exploit the redundancy between multiple views of the same scene to describe three-dimensional natural scenes efficiently, provided there are enough viewpoints.

(6) Robustness in error-prone environments. "Flexible and diverse" here refers to the use of various wired and wireless networks and various storage media. MPEG-4 will improve error robustness, especially for low bit-rate applications in error-prone environments (such as mobile communication links). MPEG-4 is the first standard to take channel characteristics into account in its audio and video representation specifications. The aim is not to replace the error control already provided by the communication network, but to provide resilience against residual errors.

(7) Content-based scalability. Content scalability means assigning a priority to each object in the image. Content-based scalability is the core of MPEG-4, because once the list of objects contained in the image and their priorities have been determined, the other content-based functions are relatively easy to implement. For very low bit-rate applications, scalability is a key factor because it provides the ability to adapt to the available resources.
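The idea of adapting to available resources by object priority can be sketched as follows. This is a hypothetical illustration rather than an MPEG-4 mechanism: each object is described by a made-up name, a priority (a lower number is taken to mean more important, which is an assumption) and a bit cost, and the sender simply keeps the most important objects that fit within the budget.

```python
def select_objects(objects, bit_budget):
    """Pick objects in priority order until the bit budget is exhausted.

    objects: list of (name, priority, bits); a lower priority number is
    assumed to mean a more important object.
    Returns the chosen names and the number of bits used.
    """
    chosen, used = [], 0
    for name, _priority, bits in sorted(objects, key=lambda o: o[1]):
        if used + bits <= bit_budget:
            chosen.append(name)
            used += bits
    return chosen, used


scene = [
    ("background", 3, 400),
    ("speaker", 1, 300),
    ("logo", 2, 100),
]

print(select_objects(scene, bit_budget=450))   # (['speaker', 'logo'], 400)
print(select_objects(scene, bit_budget=1000))  # everything fits
```

On a narrow channel only the speaker and the logo are sent, while a wider channel carries the whole scene, without re-encoding any individual object.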

These seven new functions fall into three categories: content-based interactivity, high compression ratio, and flexible and diverse access. Functions (1) to (3) belong to content-based interactivity, functions (4) and (5) to high compression ratio, and functions (6) and (7) to flexible and diverse access.

1.4 MPEG-7

The MPEG-7 standard is called "Multimedia Content Description Interface". It will extend the limited capabilities of existing proprietary content identification solutions, in particular by covering more data types. In other words, MPEG-7 will specify a standard set of descriptors for describing various types of multimedia information.

MPEG-7 also standardizes the way other descriptors are defined, together with the structures (description schemes) for the descriptors and their relationships. This description (that is, the combination of descriptors and description schemes) is associated with the content itself, so that material of interest to the user can be searched for quickly and efficiently. MPEG-7 further standardizes a language for defining description schemes, the Description Definition Language (DDL). AV material that has MPEG-7 data associated with it can be indexed and searched.

MPEG-7, like the other members of the MPEG family, is a standardized representation of audio-visual information that meets specific needs. MPEG-7 descriptors do not depend on how the described content is encoded or stored: an MPEG-7 description can be attached to an analog film or to a picture printed on paper. Nevertheless, although the MPEG-7 description does not depend on the (coded) representation of the material, it builds on MPEG-4 to some extent, because MPEG-4 codes audio and video as objects with defined temporal and spatial relationships. With MPEG-4 coding it is therefore possible to attach descriptions to the individual members (objects) of a scene. MPEG-7 accordingly needs to offer different degrees of granularity in its descriptions, so that different levels of discrimination can be achieved.

Because the description features must be meaningful in the application environment, they will differ between user domains and application fields. The same material may therefore be described with different types of features, each suited to its field of application. All of these descriptions will be coded efficiently so that searching is efficient. There may also be intermediate levels of abstraction. The level of abstraction is related to the way features are extracted: many low-level features can be extracted fully automatically, whereas high-level features require more human interaction.
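As an illustration of an automatically extracted low-level feature used for searching, the sketch below computes a coarse gray-level histogram for each item and ranks a small collection by distance to a query. The histogram is only a stand-in chosen for simplicity and is not one of the descriptors defined by MPEG-7; the function names, the four bins and the L1 distance are all assumptions.

```python
def gray_histogram(pixels, bins=4):
    """Normalized histogram of 8-bit gray values, extracted fully automatically."""
    hist = [0] * bins
    for p in pixels:
        hist[min(p * bins // 256, bins - 1)] += 1
    return [count / len(pixels) for count in hist]


def l1_distance(a, b):
    """Sum of absolute differences between two descriptors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def search(query_descriptor, database):
    """Return item names ordered from most to least similar to the query."""
    return sorted(database, key=lambda name: l1_distance(query_descriptor, database[name]))


# Descriptors are stored alongside the content, not inside it.
database = {
    "dark_scene": gray_histogram([0, 10, 20, 30]),
    "bright_scene": gray_histogram([250, 240, 230, 220]),
}

query = gray_histogram([5, 15, 25, 200])
print(search(query, database))  # ['dark_scene', 'bright_scene']
```

The search operates on the descriptors alone, which mirrors the point made above that a description does not depend on how the underlying material is coded or stored.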

2 Future prospects of MPEG

An MPEG video compression system is a complex, integrated system with a high technology content, and only a few very powerful companies in the world can bring commercial products to market. Because the technology is complicated and the equipment is expensive, MPEG video compression systems have so far not been widely adopted. However, as the technology advances, manufacturing processes mature and prices fall, their range of application is expanding. Tasks that once cost millions of dollars can now be accomplished for tens of thousands. With such an MPEG video compression system, videos, photos, pictures, films and other programs can easily be compressed and stored on a computer for home video, file management and other video production.

MPEG has formulated a series of standards, but in many cases these do not prescribe a specific implementation, and the final implementation is left to manufacturers and R&D personnel. Research on MPEG focuses mainly on two aspects: (1) the implementation of MPEG; and (2) further work on image compression methods, in order to obtain larger compression ratios and to support human-machine dialogue.

Judging from the current MPEG standards, the author believes that future work will focus mainly on object-based processing, in which different methods are chosen for different data, content and requirements. First, this is the most basic requirement of human-machine dialogue and of a people-oriented design: everyone can request a different kind of processing according to their own needs. Second, it is required in order to obtain still larger compression ratios for image data. Earlier compression methods, based on the data itself and on its transform and statistical characteristics, struggle to meet the data rates of the information superhighway. If object-based processing is realized, however, model-based compression can apply a different compression method to each object (content), achieving very large compression ratios while still meeting the requirements of human vision. The MPEG-4 and MPEG-7 standards have already taken note of this and have introduced the study of objects or content. The author therefore believes that object-based image processing will be the future direction of MPEG.

MPEG video compression technology and VCD production have opened up a new path of development. The spread of MPEG video compression technology may give rise to a new industry, namely multimedia production. The market in this area has only just started and is almost untouched in education and training, so it is an industry with great potential that is waiting to be developed. The future is an information society, in which the transmission and storage of all kinds of multimedia data is a basic problem of information processing. This article has discussed the subject only from the point of view of the MPEG standards, and many technologies in this area still need to be researched and developed. The author hopes that readers interested in this research will join the discussion.
