In recent years, advances in machine learning have given computers the ability to recognize objects in images, "listen" to and understand voice commands, and translate text.

But although Apple's Siri and Google Translate respond in real time, the complex mathematical models these tools rely on take a great deal of time, energy, and processing power to run on conventional computers. As a result, Intel, graphics powerhouse Nvidia, mobile-computing giant Qualcomm, and many chip startups are racing to develop specialized hardware that will make modern deep learning cheaper and more efficient.

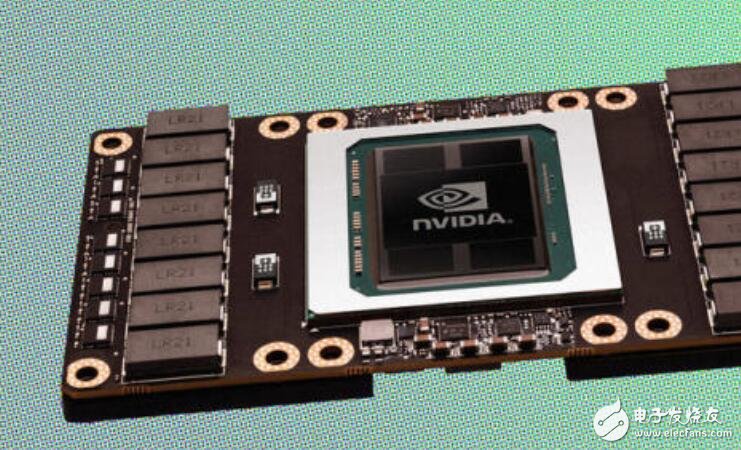

Figure: Nvidia's Tesla P100 GPU was developed for high-performance data center applications and artificial intelligence (AI).

Some artificial intelligence (AI) researchers say the importance of these chips, which speed up the development and training of new AI algorithms, is underappreciated. Speaking on an earnings call in November, Nvidia CEO Jensen Huang said that training a computer to perform a new task now takes "certainly not months, maybe days." "In essence," he said, "you have a time machine."

While Nvidia is best known for graphics cards that let gamers play the latest shooters at the highest possible resolution, the company has also focused on adapting its graphics processing units, or GPUs, to serious scientific computing and data center number crunching.

Ian Buck, vice president and general manager of Nvidia's Accelerated Computing business unit, said, "Over the past 10 years, we have taken GPU technology that used to be only for graphics and made it more and more general purpose."

Rapidly rendering video games and other realistic imagery relies on GPUs that excel at particular kinds of mathematical computation, such as matrix multiplication, and that can carry out large numbers of simple calculations simultaneously. Researchers found that the same capabilities are equally useful for other applications built on similar algorithms, including running climate simulations and modeling complex biomolecular structures.

More recently, GPUs have proven adept at training deep neural networks, the mathematical structures loosely modeled on the human brain that are the workhorses of modern machine learning. Like graphics, these networks rely heavily on repeated, parallel matrix calculations.
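To make the matrix-multiplication point concrete, here is a minimal sketch in plain Python of one fully connected neural-network layer computed as a matrix product. The function names (`matmul`, `relu`, `dense_layer`) and all the numbers are hypothetical illustrations, not taken from Nvidia or any particular framework; real systems hand this work to optimized GPU kernels rather than Python loops.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiplication: each output cell is an independent dot product."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    # Every (i, j) entry depends only on row i of a and column j of b,
    # so all entries could in principle be computed at the same time.
    return [[sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(rows)]

def relu(m: Matrix) -> Matrix:
    """Element-wise activation: negative values become zero."""
    return [[max(0.0, v) for v in row] for row in m]

def dense_layer(inputs: Matrix, weights: Matrix, bias: List[float]) -> Matrix:
    """One layer: a batch of inputs times a weight matrix, plus bias, then ReLU."""
    out = matmul(inputs, weights)
    return relu([[v + b for v, b in zip(row, bias)] for row in out])

# Hypothetical batch of 2 inputs with 3 features each, mapped to 2 outputs.
x = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 2.0]]
w = [[0.1, -0.2],
     [0.0, 0.3],
     [0.2, 0.1]]
b = [0.0, -0.5]
print(dense_layer(x, w, b))
```

Because each output cell of `matmul` is computed independently of the others, the work maps naturally onto the many cores of a GPU, and a deep network repeats this operation layer after layer.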

"Deep learning is special in that it requires a lot of dense matrix multiplication," said Naveen Rao, vice president and general manager of artificial intelligence solutions at Intel. Rao was the founder and CEO of Nervana Systems, a machine learning startup that Intel acquired earlier this year. "This is different from the workload of running a word processor or a spreadsheet."

The mathematical similarities between graphics processing and AI have given Nvidia a head start over its competitors. The company reported that data center revenue more than doubled year over year to $240 million in the third quarter ended Oct. 31, largely because of growing demand for deep learning. Other GPU makers are also likely to welcome the new demand, since earlier reports showed GPU sales falling along with declining desktop sales. Nvidia dominates the GPU market with more than 70% market share, and its stock price has nearly tripled over the past year as new applications for its chips have emerged.

Graphics cards help AI succeed

In the 2012 ImageNet Large Scale Visual Recognition Challenge, a well-known image classification competition, a team that applied GPU-powered deep learning, a first for the contest, won with results that clearly beat previous years' winners. "What they did was take accuracy from around 70% to 85%," Buck said.

GPUs have since become standard in data centers that serve companies working on machine learning. Nvidia says its GPUs are used in machine learning cloud services offered by Amazon and Microsoft. But Nvidia and other companies are still working on next-generation chips that they hope will both train deep learning systems and run them to process information more efficiently.

Nigel Toon, CEO of Graphcore, a machine learning hardware startup based in Bristol, UK, says the underlying design of existing GPUs is suited to graphics rather than artificial intelligence. He argues that GPUs' limitations force programmers to arrange data in particular ways to use the chips most efficiently, which may not be easy for more complex data such as recorded video or audio. Graphcore is developing a chip it calls the "Intelligence Processing Unit," which Toon says is designed specifically for deep learning. "Hopefully we can get rid of those limitations," he said.

Chipmakers believe machine learning will benefit from dedicated processors with fast connections between parallel computing cores, fast access to large memories that hold complex models and data, and arithmetic that favors speed over precision. Google's AlphaGo, which defeated world champion Lee Sedol earlier this year, ran on custom chips the company calls tensor processing units, which Google unveiled in May. Intel announced in November that, within the next three years, it aims to use non-GPU chips based on technology acquired with Nervana to train machine learning models 100 times faster than today's GPUs and to enable new, more complex algorithms.

"Many of the neural network solutions we've seen so far are artifacts of hardware design," Rao said. In other words, limits on memory capacity and processing speed may have capped how complex those networks can be.

Intel and many of its competitors are also preparing for a future in which machine learning models are trained and run on portable hardware rather than in data centers. Aditya Kaul, research director at market intelligence firm Tractica, says this is critical for devices such as self-driving cars, which need to react quickly to what is happening around them and to learn quickly from new incoming data without first sending it to the cloud.

"Over time, you will see a shift from the cloud to the edge," he said. That means machine learning will need to be optimized for small, energy-efficient computing, especially in portable devices.

"When you're wearing a headset, you definitely don't want a brick of a battery strapped to your head or belt," said Jack Dashwood, director of marketing at Movidius, a machine learning startup in San Mateo, California. The company, which Intel acquired in September, supplies computer vision processors for a variety of devices, including DJI drones.

Nvidia, for its part, plans to keep releasing GPUs with more machine-learning-friendly features, such as fast, low-precision arithmetic, and to expand its support for AI platforms, including next-generation applications such as self-driving cars. Electric carmaker Tesla announced in October that all of its cars will carry Nvidia hardware in their Autopilot computing systems, running neural networks that process camera and radar data efficiently. Nvidia also recently announced plans to supply hardware for a joint effort by the National Cancer Institute and the Department of Energy to study cancer and potential therapies under the federal Cancer Moonshot initiative.
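Half precision (16-bit floating point) is one common example of low-precision arithmetic; the article does not say which formats Nvidia targets, so treat this as an illustrative assumption. The sketch below uses Python's `struct` module, whose `"e"` format code packs IEEE 754 half-precision values, to round a hypothetical set of weights and inputs to 16 bits and compare a dot product against the 64-bit result. The helper names `to_half` and `dot` are hypothetical.

```python
import struct
from typing import List

def to_half(value: float) -> float:
    """Round a Python float (64-bit) to IEEE 754 half precision (16-bit) and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product, the basic operation inside every matrix multiplication."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical weights and inputs for a single neuron.
weights = [0.1234567, -0.7654321, 0.3333333]
inputs = [1.5, 2.25, -0.75]

full = dot(weights, inputs)
# Only the stored operands are rounded to 16 bits here;
# the multiply-adds themselves still run in 64-bit floats.
half = dot([to_half(w) for w in weights], [to_half(v) for v in inputs])

# Bytes needed to store one value: 8 for a double, 2 for a half.
print("bytes per value:", struct.calcsize("<d"), "vs", struct.calcsize("<e"))
print("full precision:", full)
print("half precision:", half)
print("absolute error:", abs(full - half))
```

Each half-precision value takes a quarter of the storage of a 64-bit double, and the result moves only slightly. Hardware that stores and computes at low precision can move and process more numbers per second, a trade-off that often suits neural networks because they tend to tolerate small rounding errors.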

"Nvidia spotted the machine learning trend early, which puts them in a very strong position for future innovation," Kaul said.

